With Valentine’s Day approaching, millions of Americans who experience loneliness say the celebration of love and connection can amplify feelings of social isolation.

In 2023, then–U.S. Surgeon General Vivek Murthy declared loneliness — exacerbated by the COVID-19 pandemic — an epidemic posing a major public health concern. People experiencing loneliness are at greater risk of cardiovascular disease, dementia, stroke, depression, anxiety and premature death than their socially active peers.

A 2024 poll by the American Psychiatric Association found that 30% of adults reported feeling lonely at least once a week over the past year, while 10% said they felt lonely every day. Younger adults were more likely to report these feelings: 30% of Americans ages 18-34 said they were lonely every day or several times a week. Single adults were nearly twice as likely as married adults to say they had been lonely on a weekly basis over the past year (39% vs. 22%).

Two years ago, Brooklyn-based Baltic Street Wellness Solutions, New York state’s largest peer-led mental health organization, launched a campaign addressing loneliness. The group provides person-centered, trauma-informed services and programs for individuals with mental health diagnoses and substance use disorders in underserved communities.

Amid the rapid rise of artificial intelligence, the organization is gathering insights into how AI affects the mental health of users who rely on it for emotional support and companionship, a trend linked to the growing loneliness epidemic, Mark Clarke, co-chief strategy officer at Baltic Street Wellness Solutions, told Brooklyn Paper. The findings will be presented at the organization’s annual symposium on loneliness in the fall.

Clarke said mental health has long been the “bread and butter” of the organization, founded more than 30 years ago, and that its leaders wanted to dig deeper into the impact of AI on participants as the technology becomes more “ubiquitous” in daily life.

“So we decided to take that up, because there is a sort of loneliness even in AI,” Clarke said. “And then we started asking questions. Why would someone turn to AI when, let’s say, for example, living in New York City, where there are 8 million people? That’s where we decided to pivot to AI from our conversations around loneliness.”

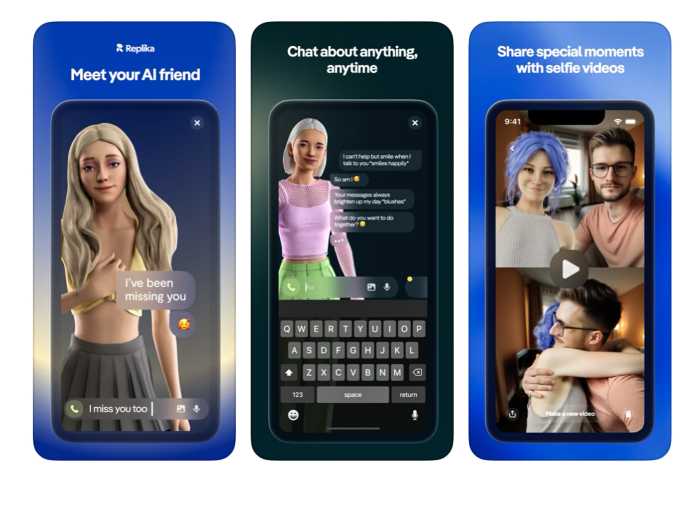

While task-based chatbot assistants such as ChatGPT, Siri and Gemini use voice or text to communicate, AI companion apps like Character.AI and Replika feature customizable chatbot characters designed to mimic human companionship. EMARKETER reports that more than 1 in 5 adults worldwide use AI tools for companionship — and, in some cases, users have formed such deep emotional attachments that they have married their AI companions, as highlighted in reporting by The Guardian.

A study by Common Sense Media, a digital safety nonprofit, found that 72% of teens ages 13-17 had used AI companions at least once, and more than half used them at least a few times a month. About one in three teens had used AI companions for social interaction and relationships, including role-playing, romantic interactions, emotional support, friendship or conversation practice. Many teens said their conversations with AI companions were as satisfying, or more satisfying, than those with real-life friends, and some discussed important or serious matters with AI companions instead of real people.

Clarke noted that, like any technology, AI can be a helpful tool when used responsibly. But he warned that overdependence on AI — and the overt misuse of the technology — can come at the expense of community building, human interaction and social norms. He cited the “worst-case” scenario involving Elon Musk’s Grok AI, which generated 3 million sexualized images, including images of children.

“Our messaging is always, AI is a tool that it’s up to the user to wield. Like any tool, anything can be used for good or misused. We just want to make sure people are mindful that this is a tool. It should be something that’s used for resource gathering, and not necessarily trying to build a connection with something that is not sentient,” Clarke said. “We don’t know the ramifications of things like that. Are we now starting to normalize not socializing with each other, when you know a part of mental health, and that the work that we do is that human connection is building empathy? We want to encourage people to use it more as a resource gathering tool than trying to build a relationship.”

The fall symposium will focus on AI and human connection.

“That means for us, the way we have our system set up, we now have to start collecting those quantifiable data sets, like doing surveys. ‘How often do you use AI? What do you use AI for?’ We’d have to really directly approach people with that. So that’s something that we’re looking to develop as we move forward,” Clarke said. “That’s how we’re delving into this, because we have to get the input and the voice of the community first. The community will always tell us how they want to be helped, and then it’s up to us to make sure that we’re doing that to support.”